Below I described a few different adaptation methods. "x" represents the distance between an RBF and the input point. I tested some of these ideas in a simple PyGame application and posted some screenshots below.

Method 1

Move the RBF closer to the input point in proportion to x. The farther away the RBF is from the input point, the faster it will move towards it. In other words, given input that stays in the same place over time, all the RBFs will reach it at the same time.

This method can be made more local by only considering RBFs within some radius from the input. The following image shows units being adapted towards the mouse pointer in my PyGame app. Adaptation is proportional to x.

You can see how all of the units beyond a certain radius do not move at all.

Method 2

If we adapt the RBF positions based on a Gaussian function...

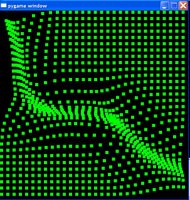

...the RBFs will move towards the input point faster as they approach it. This is desirable because more distant points will not be affected as much. The following screenshot from my PyGame application demonstrates this using a Gaussian-based adaptation function. I moved the mouse pointer (which determines the input point) around in a smooth curve.

The problem with these approaches is that the RBFs tend to clump together near the inputs... which is fine if your goal is to reach a set of discrete clusters, but not if you're trying to approximate some function. In my case, I'm usually trying to represent a state space for a physically-simulated creature, so I don't want the RBFs to clump together so much. I do want them to move towards the input points (presumably increasing the representation's resolution where it matters), but not so much that they're totally covering the exact same area.

Method 3

I tried a hybrid approach which combines the simple linear function of Method 1 and the Gaussian function of Method 2. By simply multiplying the Gaussian and linear functions, I got the following:

This looks like it would help because the RBFs adapt slowly at large distances, quickly at medium distances, then slow down again as they approach the input. This is, in fact, what it does, but I still get the clumping effect I was trying to avoid. The following picture shows the PyGame app using this hybrid approach. It's hard to tell the difference between this and the plain Gaussian method just by looking at the picture; the main difference is in how quickly the RBFs approach the input.

So now I have a few methods to self-adapt RBF centers. (Of course, these have probably already been described elsewhere, but sometimes it's fun and enlightening to figure these things out yourself.)

The problem remains that the RBFs clump too much. What I need is a good way to keep them a minimum distance apart. A brute-force approach would be to compute the distances between each pair of RBFs, but that's O(n^2) runtime complexity. The classic Kohonen's self-organizing map approach would probably work. To implement that I would simply need to add connections between nearby RBFs, and I could use those local connections to force them apart if they get too close.