I just watched this video documentary called "Building Gods."

It is a "rough cut to the feature film about AI, robots, the singularity, and the 21st century." I thought it was pretty well made and had a good selection of speakers. It's 1 hr 20 min long. Watch it if you have time:

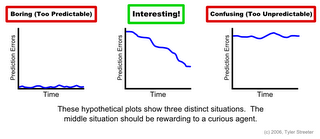

http://video.google.com/videoplay?docid=1079797626827646234

Some parts talked about how we will need to figure out how to limit AI in some ways. We don't want our superintelligent "artilects" to turn against us, of course. I've thought about this problem often in terms of reinforcement learning. If we build intelligent agents with some sort of reinforcement learning/motivation/drives/goals (which seems to me like the best way to achieve general purpose intelligent agents), we can put limits on how rewarding certain situations are. We can hard-wire reward/pain signals corresponding to the equivalent of Asimov's 3 laws of robotics, for example. Since the motivation system would determine action selection, it could be designed so that bad behavior is painful to the agent. Even with a curiosity reward system (which presumably causes a lot of misbehavior in children), rewards from "interesting" situations could be scaled down if they are harmful to humans.
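To make the reward-limiting idea a bit more concrete, here is a rough Python sketch of what such a combined reward signal might look like. Everything in it is an assumption for illustration: the function name `shaped_reward`, the `harm_to_humans` estimate (imagined as a number between 0 and 1), and the `harm_penalty` constant are all made up, and a real agent would need some way of actually estimating harm, which is the hard part.

```python
def shaped_reward(task_reward, curiosity_reward, harm_to_humans,
                  harm_penalty=100.0):
    """Combine ordinary drives with a hard-wired 'pain' signal.

    harm_to_humans is a hypothetical estimate in [0, 1] of how harmful
    the current situation is. harm_penalty is an assumed constant that
    is large compared to the other rewards.
    """
    # Curiosity is scaled down as the situation becomes more harmful.
    scaled_curiosity = curiosity_reward * (1.0 - harm_to_humans)
    # Hard-wired pain term that dominates the other rewards.
    pain = harm_penalty * harm_to_humans
    return task_reward + scaled_curiosity - pain


# Same task and curiosity rewards, with and without harm to a human.
safe = shaped_reward(task_reward=1.0, curiosity_reward=2.0, harm_to_humans=0.0)
harmful = shaped_reward(task_reward=1.0, curiosity_reward=2.0, harm_to_humans=1.0)
print(safe, harmful)  # 3.0 -99.0
```

Since action selection follows the reward, an agent maximizing this signal should learn to avoid the harmful situation no matter how "interesting" it is.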

Rather than hard-wire all kinds of reward signals, there could be just a couple of them related to human approval. The reinforcing approval and disapproval received from a human teacher could be scaled very high compared to other rewards. It would be like training a pet. A well-trained dog is pretty reliable, after all. I think it could be even more effective for a robot. If your housecleaning robot decides to break a vase just to see what would happen, a verbal scolding (which becomes a large pain signal to the robot internally) would ensure that it never happened again. And all of this training would only be necessary once - the learned associations could be transferred to other robots.
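Here is a toy sketch of the vase example, assuming a very simple learner that just keeps one value estimate per action. The names (`APPROVAL_SCALE`, `LEARNING_RATE`, `update`, `greedy_action`) and all of the numbers are invented for illustration; this is not meant to be how a real robot would be built.

```python
APPROVAL_SCALE = 50.0  # assumed: teacher feedback dwarfs all other rewards
LEARNING_RATE = 0.5    # assumed step size for the value update

# Learned value of each action, starting from no preference.
q = {"clean_floor": 0.0, "break_vase": 0.0}


def update(action, intrinsic_reward, teacher_feedback):
    """teacher_feedback: +1 for praise, -1 for a scolding, 0 for none."""
    reward = intrinsic_reward + APPROVAL_SCALE * teacher_feedback
    q[action] += LEARNING_RATE * (reward - q[action])


def greedy_action():
    """Pick the action with the highest learned value."""
    return max(q, key=q.get)


# The robot breaks a vase out of curiosity (+1) and gets scolded (-1).
update("break_vase", intrinsic_reward=1.0, teacher_feedback=-1)
# Cleaning is mildly rewarding and earns no comment.
update("clean_floor", intrinsic_reward=0.2, teacher_feedback=0)

print(q["break_vase"])   # -24.5
print(greedy_action())   # clean_floor

# "Training once": copy the learned values into another robot.
other_robot_q = dict(q)
```

One scolding pushes the value of `break_vase` far below anything curiosity alone could recover, and copying the table is the (very simplified) version of transferring the learned associations to other robots.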

A more interesting issue is whether intelligent agents should be allowed to change their own hardware and software. If so, things could get messy. In hope of achieving a higher long-term reward (which is the basis for reinforcement learning), they might modify their internal reward system and overwrite the hard-wired limits. They could remove the reward/pain system associated with Asimov's 3 laws. Everyone knows what happens next. The only good solution I see would be to make it painful for a robot to try to modify itself (or to even think about doing so).

Speaking of modifying reward systems, I think this is an interesting possibility for humans, too, which I've never heard discussed. Futurists always talk about the amazing possibilities down the road, like fully immersive VR, but they usually assume that our brains' reward systems will stay the same. Most of the things predicted in futuristic utopian societies (like vast scientific and technological advancements, upgraded bodies and minds, extensive space exploration, automated everything, etc.) are only rewarding if our brains say so.

What if we could change the hard-wired reward signals within ourselves? What if a person could be altered so that the mere sight of a stapler was the most pleasurable sensation possible? Sounds kind of boring... but so does taking drugs. Why would injecting heroin into your bloodstream be fun? Watching a person do so doesn't look fun. It's fun because it hijacks the brain's reward system, making the behavior much more desirable than other things. So, the problem with the stapler addict would be the same: normal daily activities would lose their appeal, and even the stapler rewards wouldn't be so great after a while. He/she would move on to two staplers, then three, then five, then ten... So goes the hedonic treadmill.

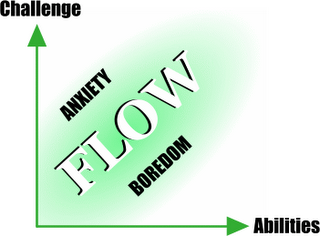

The problem is that the brain quickly adapts to whatever level of reward it receives. People who win the lottery aren't really much happier than the rest of us, once the euphoria wears off. So is there any point in modifying the brain's reward signals? I still think so. The solution isn't merely to change what's rewarding, but to modify the reward processing algorithm itself. It could be made so that it does not adapt to (i.e. get bored with) a given rewarding situation. Or it could just reset itself after a while. So every January 1st, your brain resets the reward adaptation system, and everything from the previous year that has become boring is suddenly exciting again.
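The adapt-then-reset idea can be sketched with a toy model in Python. I'm assuming here that the "felt" reward is the raw reward minus a baseline that drifts toward whatever reward keeps arriving; the class name `AdaptiveReward`, the `adaptation_rate` parameter, and the numbers are all hypothetical, and real brains are surely far messier than this.

```python
class AdaptiveReward:
    """Toy hedonic adaptation: felt reward = raw reward - baseline."""

    def __init__(self, adaptation_rate=0.5):
        # adaptation_rate = 0.0 would model a system that never adapts.
        self.adaptation_rate = adaptation_rate
        self.baseline = 0.0

    def feel(self, raw_reward):
        felt = raw_reward - self.baseline
        # The baseline drifts toward the reward level being received.
        self.baseline += self.adaptation_rate * (raw_reward - self.baseline)
        return felt

    def reset(self):
        """The 'January 1st' reset: forget what has become boring."""
        self.baseline = 0.0


brain = AdaptiveReward()
felt = [brain.feel(10.0) for _ in range(4)]
print(felt)  # [10.0, 5.0, 2.5, 1.25]

brain.reset()
after_reset = brain.feel(10.0)
print(after_reset)  # 10.0
```

The same raw reward feels half as good each time until the reset, after which it is as exciting as it was at first. Setting `adaptation_rate` to zero gives the other option mentioned above, a reward system that never gets bored.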

Even more extreme, we could just set the internal reward signal (like the TD error-like signal encoded by midbrain dopamine neurons) to be maximal all the time. Unlike the reward signal in drug addicts, it would never adapt. A person in that state would be high constantly and never come down. He/she wouldn't even need fully immersive VR. What would be the purpose? At that point the hedonic treadmill would effectively be defeated.

Ok, admittedly, that would be a twisted existence... but from the point of view of the person with the altered brain, it would be great. Assuming the reward hypothesis is true for humans, I wouldn't be surprised if it actually happens someday. Disclaimer: I'm not recommending that anyone actually try this, either now or in the future. I just think it's a really interesting idea that should be discussed more among futurists. And I have never taken illegal drugs.